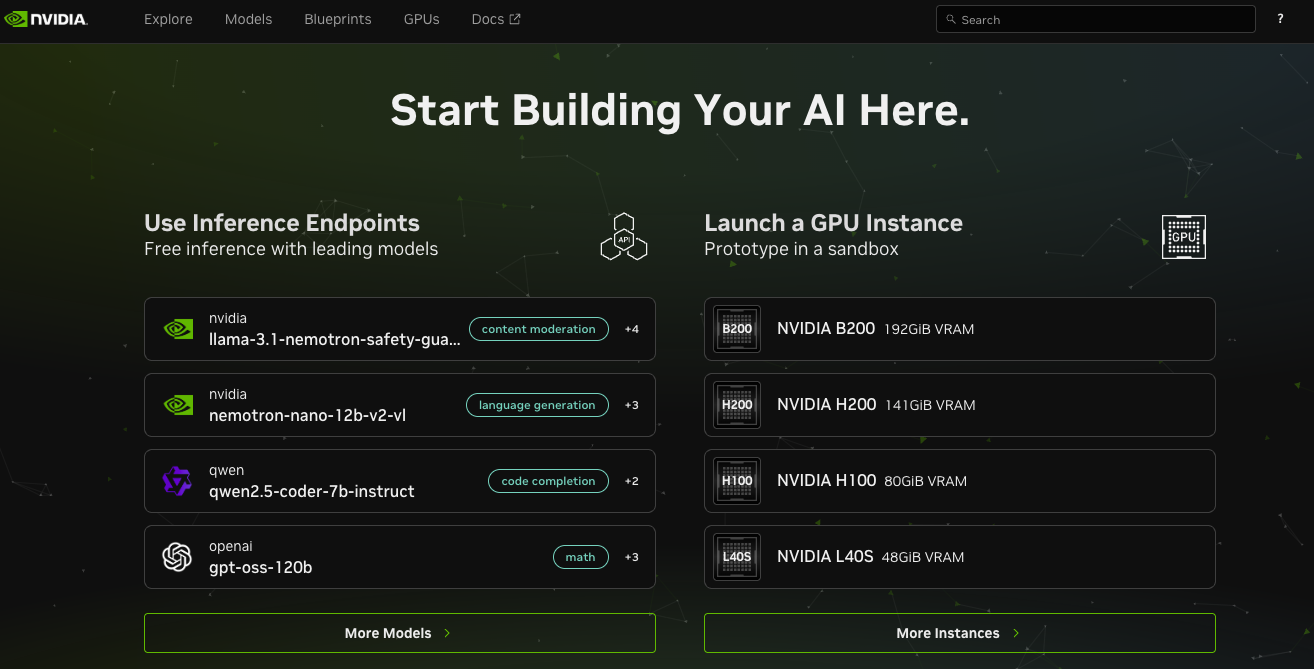

Introduction to the NVIDIA AI Ecosystem

- NVIDIA provides a full-stack platform for AI training, inference, and end-to-end acceleration

- In real-world environments, NVIDIA tools reduce:
  - training time
  - inference latency
  - GPU costs (through better utilization)
  - operational complexity (drivers, dependencies, scheduling)
- The ecosystem is built for production environments such as:
  - LLM API services
  - multimodal applications
  - edge/latency-sensitive workloads
  - enterprise-scale ML pipelines

NVIDIA AI Ecosystem, Ref. build.nvidia.com


NVIDIA GPU Hardware for AI

- NVIDIA GPUs accelerate the tensor operations required for deep learning

- In practice, each GPU family maps to specific use cases:

| GPU Model | Key Characteristics | When to Use | Typical Workloads |
|---|---|---|---|
| H100 / H200 | Hyperscaler-grade GPUs for massive-scale AI | Training 70B–400B LLMs; high-QPS inference with continuous batching; multi-node distributed training (NVLink / NVSwitch); DGX clusters or cloud GPU pods | Training frontier LLMs; high-throughput LLM serving (TensorRT-LLM, vLLM); enterprise-scale distributed training |
| A100 | Extremely versatile for both training and inference | Fine-tuning Llama-3-class models (7B–70B); vision foundation models; cost-efficient production ML workflows | Batch inference pipelines; vision and multimodal models; general-purpose ML workloads |
| L40 / L40S | Strong balance of training and inference performance | Image/video models (diffusion, ViT, generative models); medium-scale LLM inference; on-prem enterprise GPU servers | SDXL / diffusion models; 7B–13B LLM inference; multimodal pipelines |
| RTX 4000 / RTX 6000 Ada | Workstation and edge-friendly high-performance GPUs | Edge AI clusters; on-prem inference nodes; developer workstations | 7B–13B LLM inference (q4/q8); SDXL image generation; RAG/embeddings pipelines |
| RTX PRO 6000 Blackwell Server Edition | Blackwell-architecture GPU, positioned here for efficient inference | LLM API serving with FP4/FP8; high-concurrency token generation; low-power inference clusters | Cost-optimized LLM serving; 24/7 production APIs; large distributed inference fleets |
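
A rough way to read the table above is to ask whether a model's weights fit in a single GPU's memory at a given precision. The sketch below is only an illustration: the memory figures are nominal capacities for common SKUs (check the datasheet for the exact card), and the `overhead` factor standing in for KV cache and activations is a hypothetical placeholder, not a measured value.

```python
# Nominal memory capacities in GB for common SKUs (assumed; verify per card).
GPU_MEMORY_GB = {
    "H200": 141,
    "H100": 80,
    "A100": 80,
    "L40S": 48,
    "RTX 6000 Ada": 48,
}

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}


def weight_memory_gb(params_billion, precision):
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]


def gpus_that_fit(params_billion, precision, overhead=1.2):
    """GPUs whose memory covers the weights times a hypothetical overhead."""
    needed = weight_memory_gb(params_billion, precision) * overhead
    return [name for name, gb in GPU_MEMORY_GB.items() if gb >= needed]


print(weight_memory_gb(70, "fp16"))  # 140.0
print(gpus_that_fit(70, "fp8"))      # ['H200']
```

Under these assumptions a 70B model in FP16 needs about 140 GB for weights alone, which is why such models are either sharded across GPUs or quantized.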

CUDA Platform

- CUDA provides the execution layer for all GPU-accelerated workloads

- Why it's practical:
  - Every major ML framework (PyTorch, TensorFlow, JAX) runs its GPU operations as CUDA kernels on NVIDIA hardware
  - Custom kernels (FlashAttention, fused RoPE) and runtime features such as CUDA Graphs rely on CUDA
  - Production inference stacks (TensorRT, vLLM, Triton) depend on CUDA for GPU execution
- CUDA version management is critical:
  - Mismatched CUDA ↔ driver versions lead to runtime failures
  - GPU Operator simplifies this in Kubernetes
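
The version mismatch mentioned above can be illustrated with a small check. This is a simplified sketch: it assumes the rule that the CUDA runtime an application uses should not be newer than the highest CUDA version the installed driver supports, and it ignores minor-version compatibility and forward-compatibility packages. The function names are hypothetical; in practice the two inputs might come from `torch.version.cuda` and from the "CUDA Version" field in the `nvidia-smi` header.

```python
def parse_version(text):
    """Turn 'major.minor' or 'major.minor.patch' into (major, minor)."""
    major, minor = text.strip().split(".")[:2]
    return int(major), int(minor)


def runtime_supported(runtime_cuda, driver_max_cuda):
    """True when the driver's maximum CUDA version covers the runtime."""
    return parse_version(runtime_cuda) <= parse_version(driver_max_cuda)


print(runtime_supported("12.1", "12.4"))  # True
print(runtime_supported("12.4", "11.8"))  # False
```

A check like this could run at container start-up so that a mismatch fails fast with a clear message instead of surfacing later as an opaque runtime error.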

TensorRT and Inference Optimization

- TensorRT is commonly used to optimize and deploy models in production

- Real-world benefits:
  - Throughput improvements of roughly 2×–6×, depending on model, precision, and baseline
  - Reduced GPU memory usage
  - Lower latency → better user experience
- Practical examples:
  - Quantizing FP16 → FP8 or FP4 for LLMs
  - Fusing attention kernels
  - Converting PyTorch models to ONNX → TensorRT for deployment
- Used heavily in:
  - self-hosted chatbots
  - diffusion pipelines
  - streaming / real-time inference
  - enterprise GPU inference clusters
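
To give a feel for what quantization trades away, the sketch below applies symmetric integer quantization to a handful of made-up weights. This is a deliberate stand-in, not TensorRT's method: TensorRT's FP8/FP4 paths use floating-point formats and calibration, whereas this example uses plain signed integers with a single scale, purely to show that fewer bits means a coarser grid and larger round-trip error.

```python
def quantize_symmetric(values, bits=8):
    """Map floats to signed integers using one shared scale factor."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits, 7 for 4 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs else 1.0
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the integer codes."""
    return [q * scale for q in quantized]


weights = [0.12, -0.5, 0.33, 0.9, -0.07]  # illustrative values only
for bits in (8, 4):
    codes, scale = quantize_symmetric(weights, bits)
    restored = dequantize(codes, scale)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print(f"{bits}-bit codes: {codes}, worst round-trip error: {worst:.4f}")
```

The round-trip error is bounded by half the scale, so halving the bit width enlarges the error considerably; this is why low-precision deployment is usually validated against an accuracy benchmark.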

NVIDIA Triton Inference Server

- Production inference server used widely across cloud/edge

- Practical reasons teams adopt it:
  - One server supports multiple backends (PyTorch, TensorFlow, ONNX, TensorRT, vLLM)
  - Built-in dynamic batching increases throughput automatically
  - Model versioning simplifies CI/CD
  - Ensemble models can combine preprocessing → model → postprocessing
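
The idea behind dynamic batching can be sketched in a few lines. The function below is a toy model, not Triton's scheduler: it groups request arrival times into batches that close when they reach a maximum size or when the next request arrives too long after the first one in the batch. The parameter names `max_batch_size` and `max_delay_ms` are chosen for illustration and only loosely mirror Triton's `max_batch_size` and `max_queue_delay_microseconds` settings.

```python
def form_batches(arrivals_ms, max_batch_size=4, max_delay_ms=5.0):
    """Group request arrival times (in ms) into batches.

    A batch closes when it is full, or when the next request arrives more
    than max_delay_ms after the first request in the batch.
    """
    batches, current = [], []
    for t in sorted(arrivals_ms):
        if current and (len(current) == max_batch_size
                        or t - current[0] > max_delay_ms):
            batches.append(current)
            current = []
        current.append(t)
    if current:
        batches.append(current)
    return batches


print(form_batches([0, 1, 2, 3, 4, 20, 21, 40]))
# [[0, 1, 2, 3], [4], [20, 21], [40]]
```

Bursts of traffic fill batches and raise GPU utilization, while isolated requests are served after only a short wait, which is the throughput/latency trade-off the delay setting controls.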

Typical Triton deployment patterns

- REST / gRPC endpoints for online inference

- Autoscaled Kubernetes deployments

- Multi-model serving (LLM + embedding + reranker on same GPU)

- Token streaming for LLM inference (via TensorRT-LLM or vLLM backend)
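
For the REST pattern above, Triton's HTTP endpoint follows the KServe v2 inference protocol. The sketch below only builds the URL and JSON body and does not send anything; the model name `my_model`, the tensor name `INPUT0`, and the host/port are placeholders that would have to match a real deployment's model configuration.

```python
import json


def build_infer_request(input_name, values, datatype="FP32"):
    """Build a v2-protocol JSON body for one input tensor of shape [1, N]."""
    return {
        "inputs": [
            {
                "name": input_name,
                "shape": [1, len(values)],
                "datatype": datatype,
                "data": list(values),
            }
        ]
    }


def infer_url(base_url, model_name):
    """URL of the inference endpoint for a given model."""
    return f"{base_url.rstrip('/')}/v2/models/{model_name}/infer"


body = build_infer_request("INPUT0", [0.1, 0.2, 0.3])  # placeholder tensor name
print(infer_url("http://localhost:8000", "my_model"))  # placeholder host and model
print(json.dumps(body))
```

The body could then be posted with any HTTP client; for production use, the official `tritonclient` package handles binary tensor encoding and gRPC as well.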

NVIDIA NIM

- NIM provides ready-to-deploy microservices for:
  - embedding models
  - Llama-family LLMs
  - document parsing / OCR
  - vision models
- Benefits for engineering teams:
  - No manual environment setup
  - Standardized, versioned container images
  - Works well for trial, PoC, or hybrid-cloud integration
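
NIM LLM microservices expose an OpenAI-compatible HTTP API, so a client can be very small. The following is a minimal sketch that constructs, but does not send, a chat-completion request using only the standard library. The base URL and the model identifier are assumptions for illustration and depend on which container is actually running.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt, max_tokens=64):
    """Construct a POST request for an OpenAI-style chat completion."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical local endpoint and model name.
req = build_chat_request("http://localhost:8000", "meta/llama3-8b-instruct", "Hello")
print(req.full_url)
# To send it against a running container: urllib.request.urlopen(req)
```

Because the API shape matches OpenAI's, existing client libraries can usually be pointed at the service by changing only the base URL.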

GPU Management in Kubernetes

GPU Operator

- Automates installation of:
  - NVIDIA drivers
  - CUDA libraries
  - container runtime integration
  - DCGM monitoring agents
- Essential for stable production clusters

Device Plugin

- Exposes GPU resources to the scheduler

- Required for:
  - GPU sharing
  - MIG partitions
  - guaranteed resource allocation
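
Once the device plugin is running, a workload requests GPUs through the `nvidia.com/gpu` extended resource in its container limits. The sketch below generates such a Pod manifest as JSON (which `kubectl apply -f` accepts alongside YAML). The pod name and image are hypothetical placeholders.

```python
import json


def gpu_pod_manifest(name, image, gpus=1):
    """Return a minimal Pod manifest that requests whole GPUs."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # The device plugin advertises this resource to the scheduler.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# Hypothetical name and image, for illustration only.
manifest = gpu_pod_manifest("cuda-test", "example.com/cuda-app:latest")
print(json.dumps(manifest, indent=2))
```

The scheduler will only place the Pod on a node that advertises enough free `nvidia.com/gpu` capacity, which is how the allocation guarantee is enforced.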

DCGM Exporter

- Monitoring agent for:
  - GPU utilization
  - memory usage
  - power consumption
  - temperature
- Integrates with Prometheus and Grafana dashboards
- Helps catch performance issues early (thermal throttling, OOM, underutilization)
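
DCGM Exporter publishes metrics in the Prometheus text format, so simple checks can be prototyped before building dashboards. The sketch below parses a hand-written sample and flags underutilized or hot GPUs. The sample values, hostnames, and thresholds are invented for illustration; the metric names `DCGM_FI_DEV_GPU_UTIL` and `DCGM_FI_DEV_GPU_TEMP` are standard DCGM fields, but the exact set exported depends on the exporter's configuration.

```python
import re

# Hand-written sample in Prometheus text format (values are invented).
SAMPLE = '''\
# HELP DCGM_FI_DEV_GPU_UTIL GPU utilization (in %).
DCGM_FI_DEV_GPU_UTIL{gpu="0",Hostname="node-a"} 12
DCGM_FI_DEV_GPU_UTIL{gpu="1",Hostname="node-a"} 93
DCGM_FI_DEV_GPU_TEMP{gpu="0",Hostname="node-a"} 41
DCGM_FI_DEV_GPU_TEMP{gpu="1",Hostname="node-a"} 86
'''

LINE = re.compile(r'^(\w+)\{([^}]*)\}\s+([-+.\deE]+)$')


def parse_metrics(text):
    """Return (metric_name, labels, value) for each labeled sample line."""
    rows = []
    for line in text.splitlines():
        match = LINE.match(line.strip())
        if not match:
            continue  # skips comments and blank lines
        labels = dict(re.findall(r'(\w+)="([^"]*)"', match.group(2)))
        rows.append((match.group(1), labels, float(match.group(3))))
    return rows


def find_alerts(rows, min_util=20.0, max_temp=85.0):
    """Flag GPUs below a utilization floor or above a temperature ceiling."""
    alerts = []
    for name, labels, value in rows:
        gpu = labels.get("gpu", "?")
        if name == "DCGM_FI_DEV_GPU_UTIL" and value < min_util:
            alerts.append(f"gpu {gpu}: underutilized ({value:.0f}%)")
        elif name == "DCGM_FI_DEV_GPU_TEMP" and value > max_temp:
            alerts.append(f"gpu {gpu}: hot ({value:.0f} C)")
    return alerts


for alert in find_alerts(parse_metrics(SAMPLE)):
    print(alert)
```

In a real cluster the same thresholds would more naturally live in Prometheus alerting rules; the script form is mainly useful for quick experiments.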

Why the NVIDIA Ecosystem Matters

- Provides an end-to-end production stack from hardware → runtime → model server

- Reduces engineering overhead:
  - no manual driver installs
  - fewer dependency conflicts
  - no need to write custom distributed training kernels
- Enables practical workloads:
  - real-time LLM serving
  - high-throughput batch inference
  - multimodal systems
  - RAG pipelines
  - enterprise-ready ML services

In short:

NVIDIA delivers a stable, optimized, and battle-tested platform for real-world AI training and inference.