Why GPUs Matter for AI

- Deep learning = massive matrix/tensor operations

- Requires highly parallel compute

- GPUs provide thousands of cores, high memory bandwidth, and AI accelerators

- Backbone of modern AI training and inference

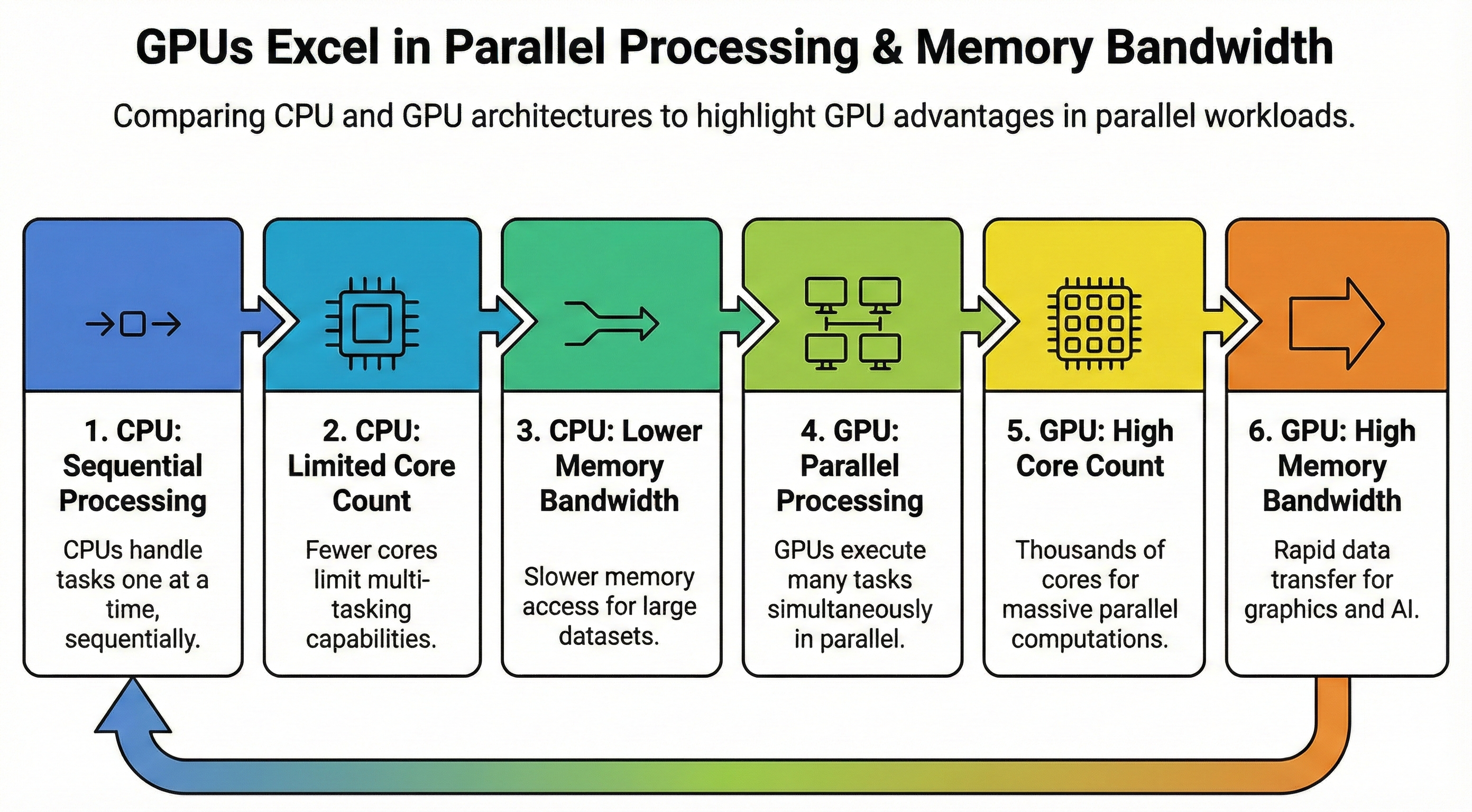

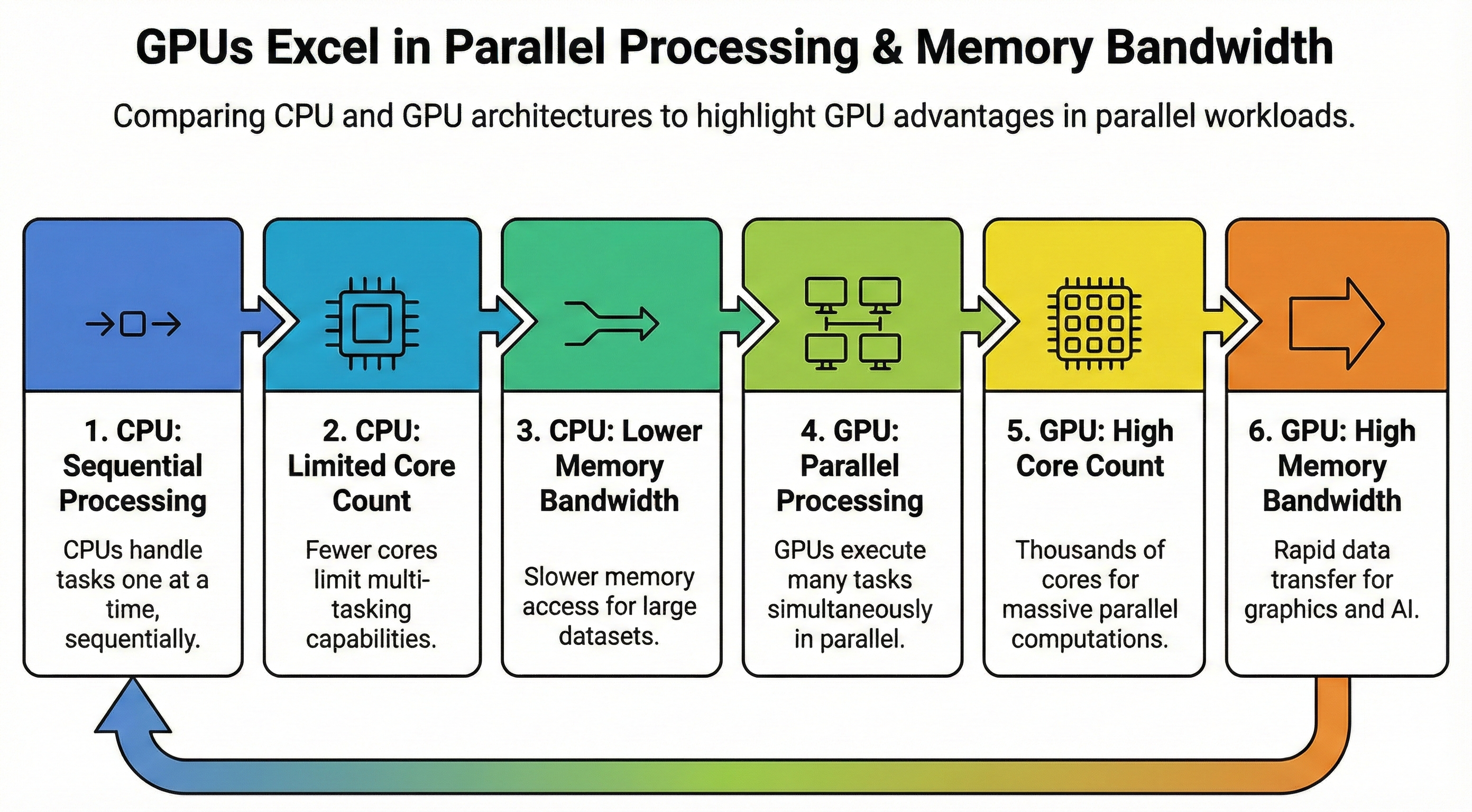

CPUs Are Not Enough

- Optimized for sequential, general-purpose workloads

- 4–64 powerful cores but limited parallelism

- Inefficient for large-scale matrix multiplications

How GPUs Accelerate Matrix Operations

- Thousands of lightweight cores for massive parallelism

- High arithmetic throughput for linear algebra

- Significantly faster training and inference

- Reduces time-to-result for deep learning workloads

GPU Bandwidth Enables Fast Inference

- High-speed GDDR7 Memory (e.g., up to 1.8 TB/s on RTX Pro 6000 Blackwell Server edition)

- High-bandwidth HBM (e.g., up to 3 TB/s on H100)

- Rapid weight/activation access for low-latency inference

- High throughput per watt and per dollar

- Efficient batching for real-time and large-scale deployments

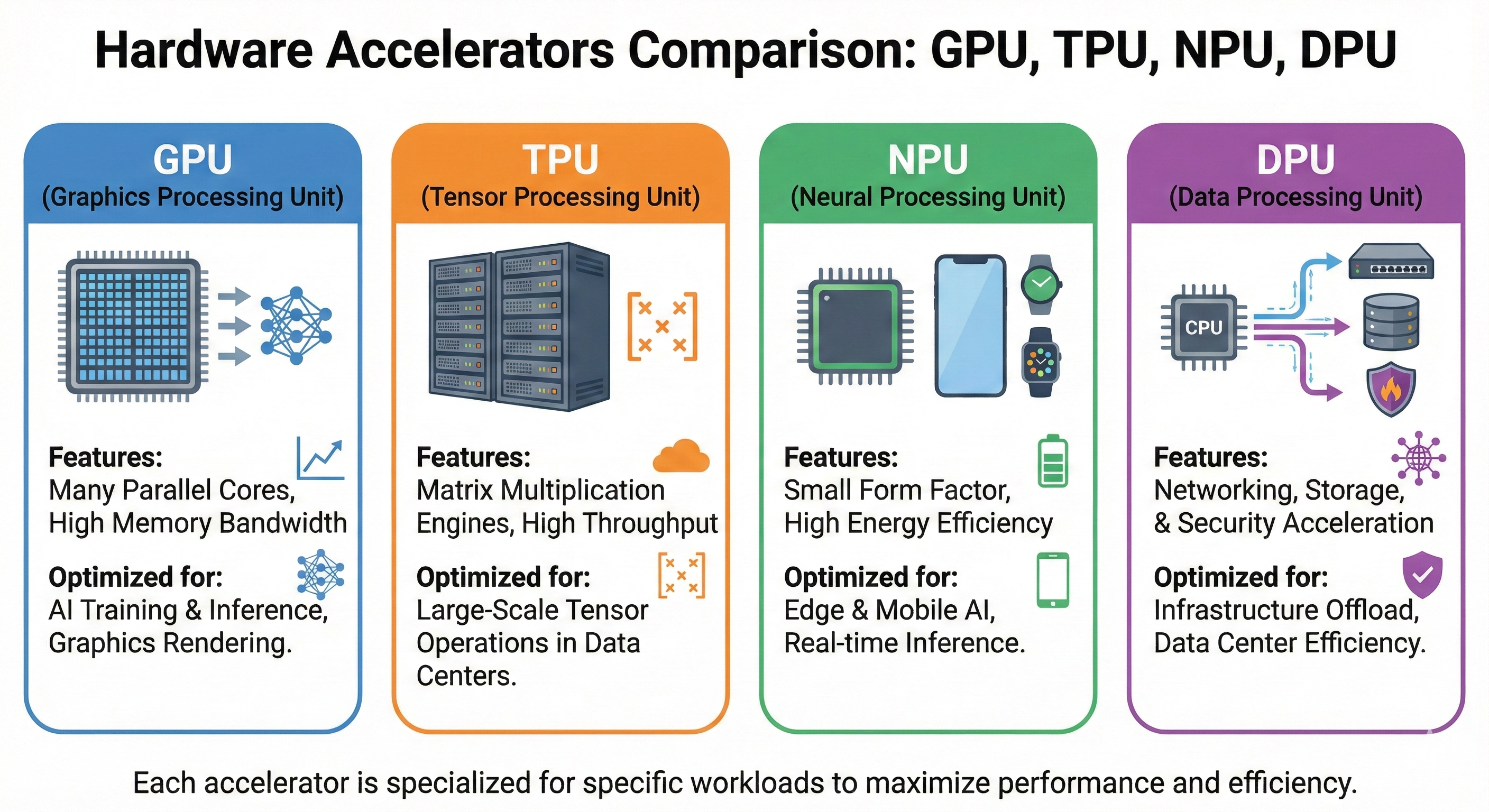

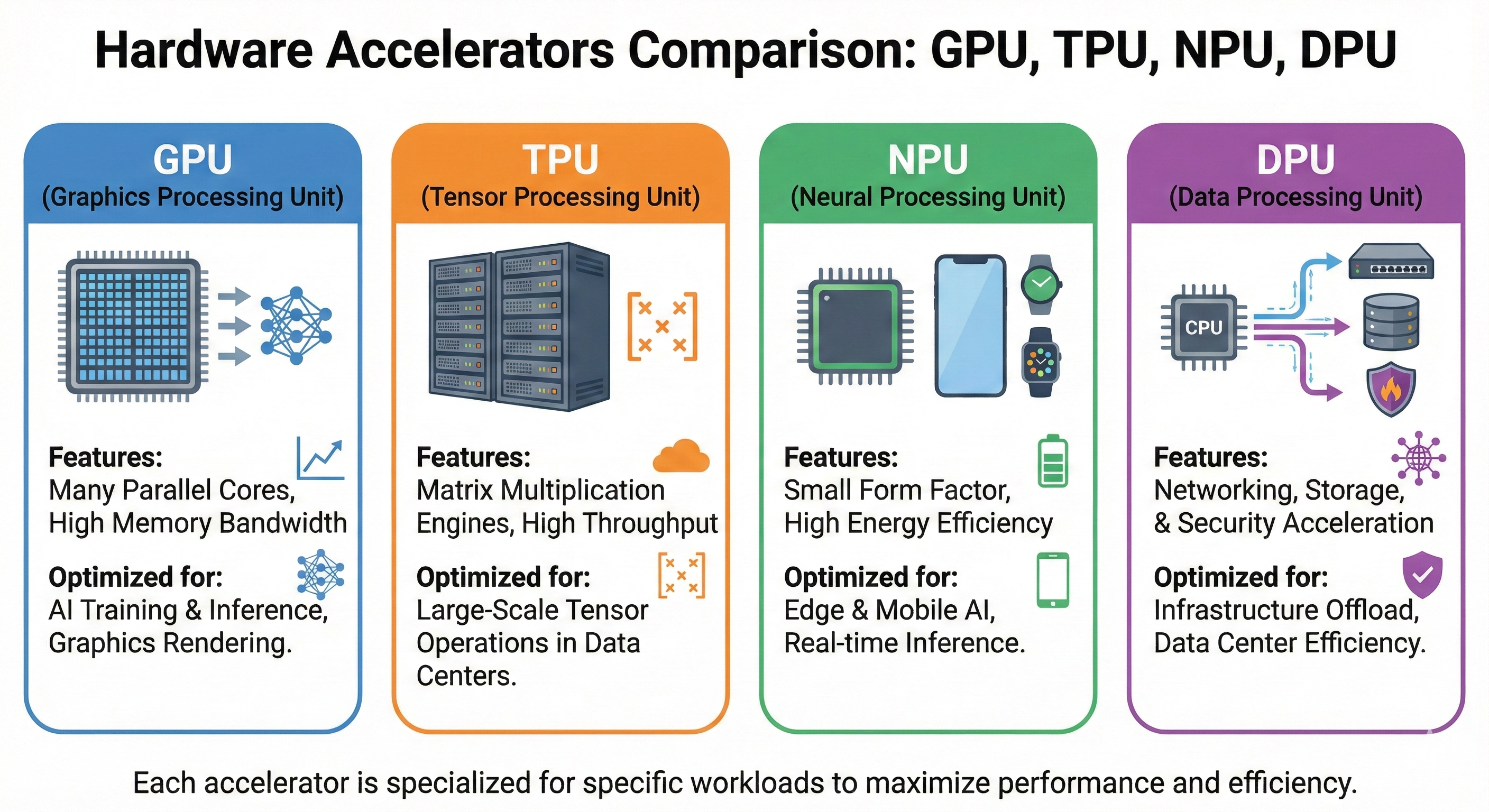

GPUs vs TPUs vs NPUs