Data Pipelines and the ML Workflow¶

What Are Data Pipelines?¶

Data pipelines are automated systems that move data across stages — collecting, transforming, enriching, and delivering it to downstream systems such as ML models, analytics engines, or storage layers.

- Key Characteristics

- Ingestion – Logs, events, databases, APIs, sensors

- Transformation – Cleaning, normalization, enrichment

- Routing – Moving data to warehouses, feature stores, ML systems

- Automation – Scheduled or real-time workflows

- Scalability – Handles massive, heterogeneous datasets

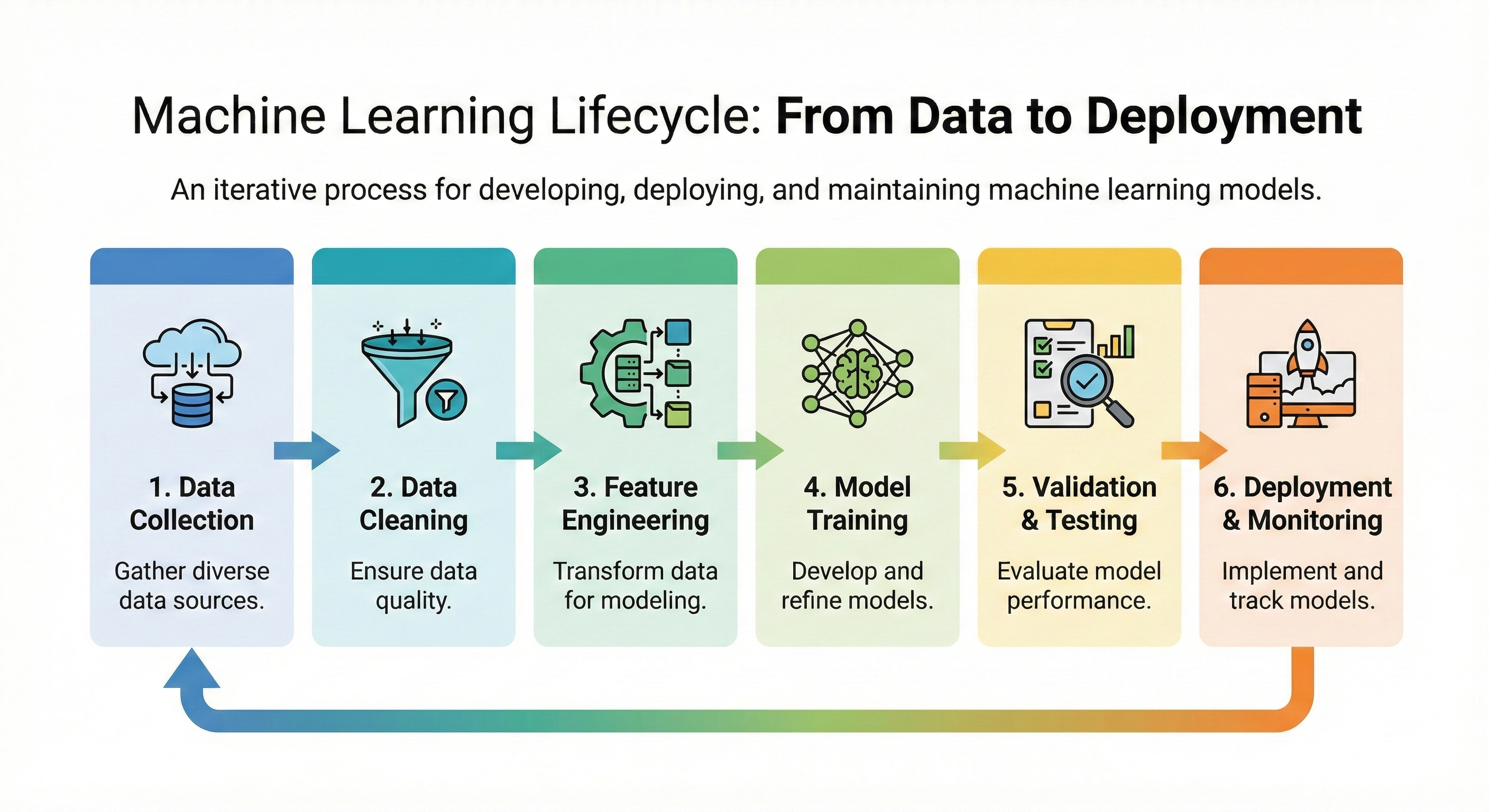

The End-to-End ML Workflow¶

- They connect the entire lifecycle:

Data Sources → Feature Store → Model Training → Deployment → Monitoring → Retraining

Where This Applies in Modern AI Systems¶

- This workflow supports:

- LLM training and fine-tuning

- Recommendation engines

- Fraud and anomaly detection

- Predictive maintenance

- Real-time inference applications

- Retrieval-Augmented Generation (RAG) pipelines